Jaan Tallinn is a founding engineer of Skype and Kazaa. He co-founded the Centre for the Study of Existential Risk and the Future of Life Institute, and he philanthropically supports other existential-risk research organizations.

He was recently interviewed by The Guardian about AI's potential to end human civilization. It is an excellent, if long, read, with some juicy quotes and insights. Here are some of my favourite snippets:

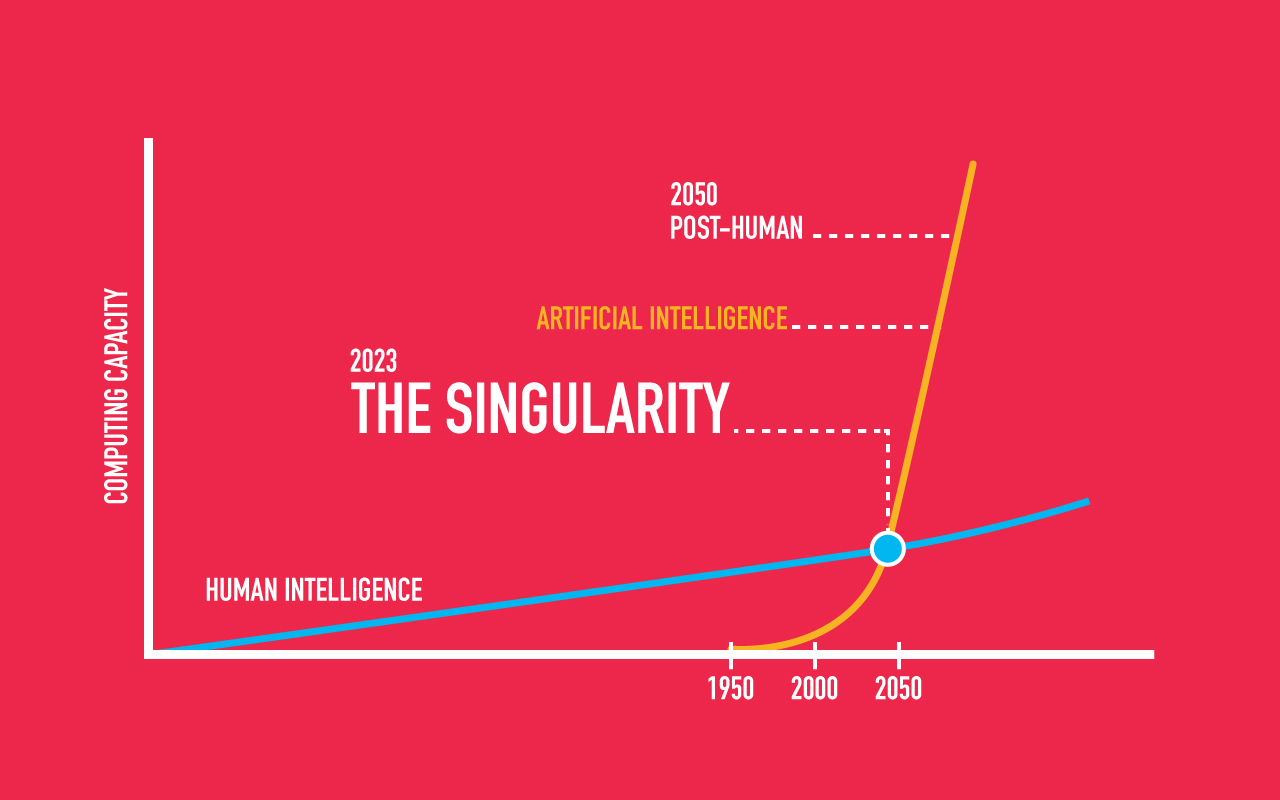

“I do agree that … preparing for the event of general AI surpassing human intelligence is one of the top tasks for humanity.”

“If the reward structure of a superhuman AI is not properly programmed, even benign objectives could have insidious ends.”

“Superintelligent AI brings unique threats. Ultimately, I hope that the AI community might follow the lead of the anti-nuclear movement in the 1940s. In the wake of the bombings of Hiroshima and Nagasaki, scientists banded together to try to limit further nuclear testing.” “The Manhattan Project scientists could have said: ‘Look, we are doing innovation here, and innovation is always good, so let’s just plunge ahead…’ But they were more responsible than that.”

“Tallinn proposed not only walling off superintelligence in a holding tank (a physical structure) but also restricting it to answering questions, like a really smart Ouija board. The approach that most excites researchers is finding a way to make AI adhere to human values, not by programming them in, but by teaching AIs to learn them. In a world dominated by partisan politics, people often dwell on the ways in which our principles differ.”

When asked what success in AI safety might look like, Tallinn referred to The Lego Movie, in which “…everything is awesome.”

Guest post by The Futures Agency content curator Petervan
