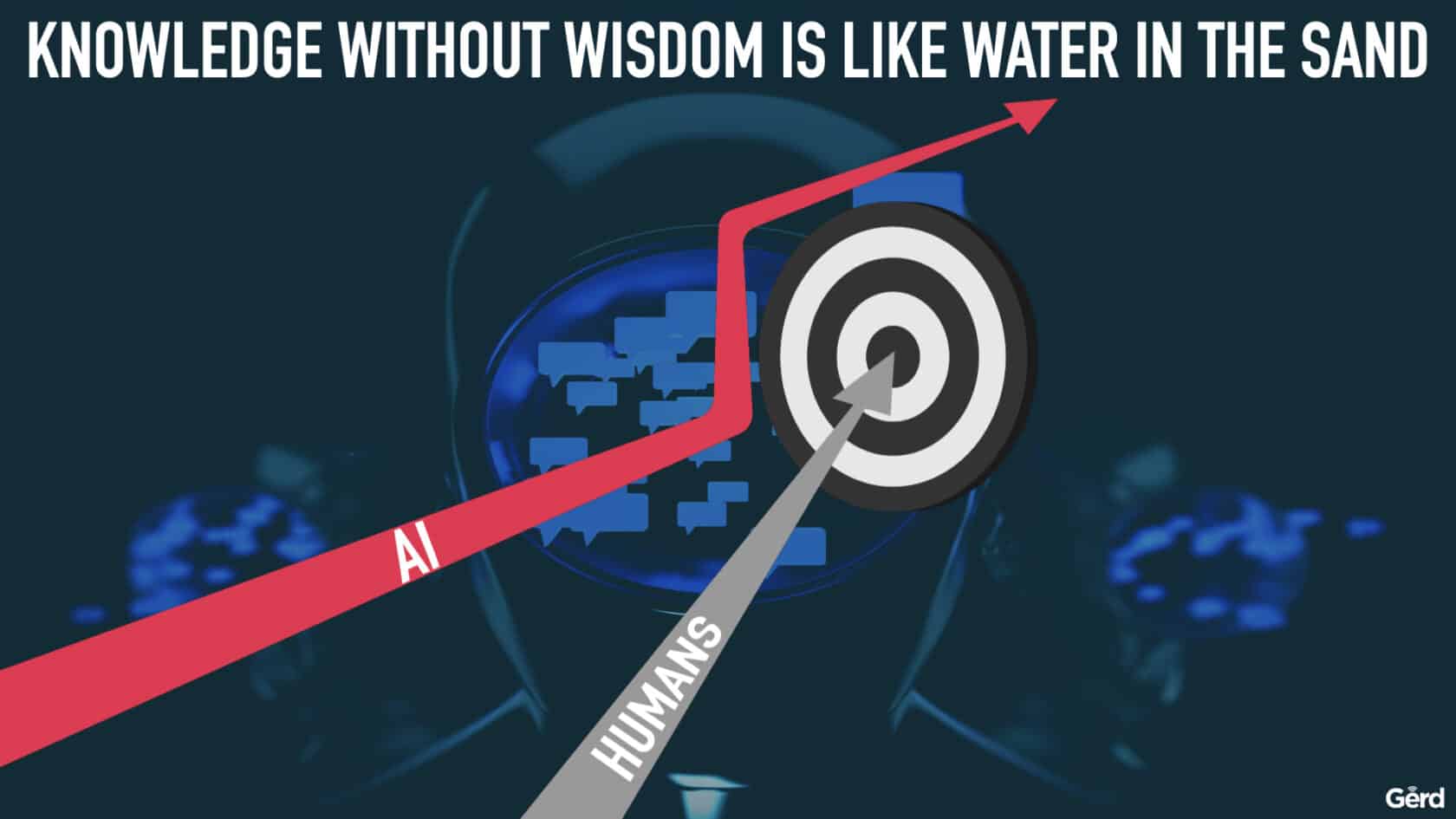

Generative AI, ChatGPT, Dalle2 et al: “Knowledge without wisdom is like water in the sand” – An excited yet critical commentary by Futurist Gerd Leonhard

* Check out my new speaking topic on GenerativeAI and ChatGPT and watch the recording of my January 30, 2023 livestream session on this topic (some of the images I used in the talk are in the gallery below)

Updated on April 1, 2023 – new videos below!

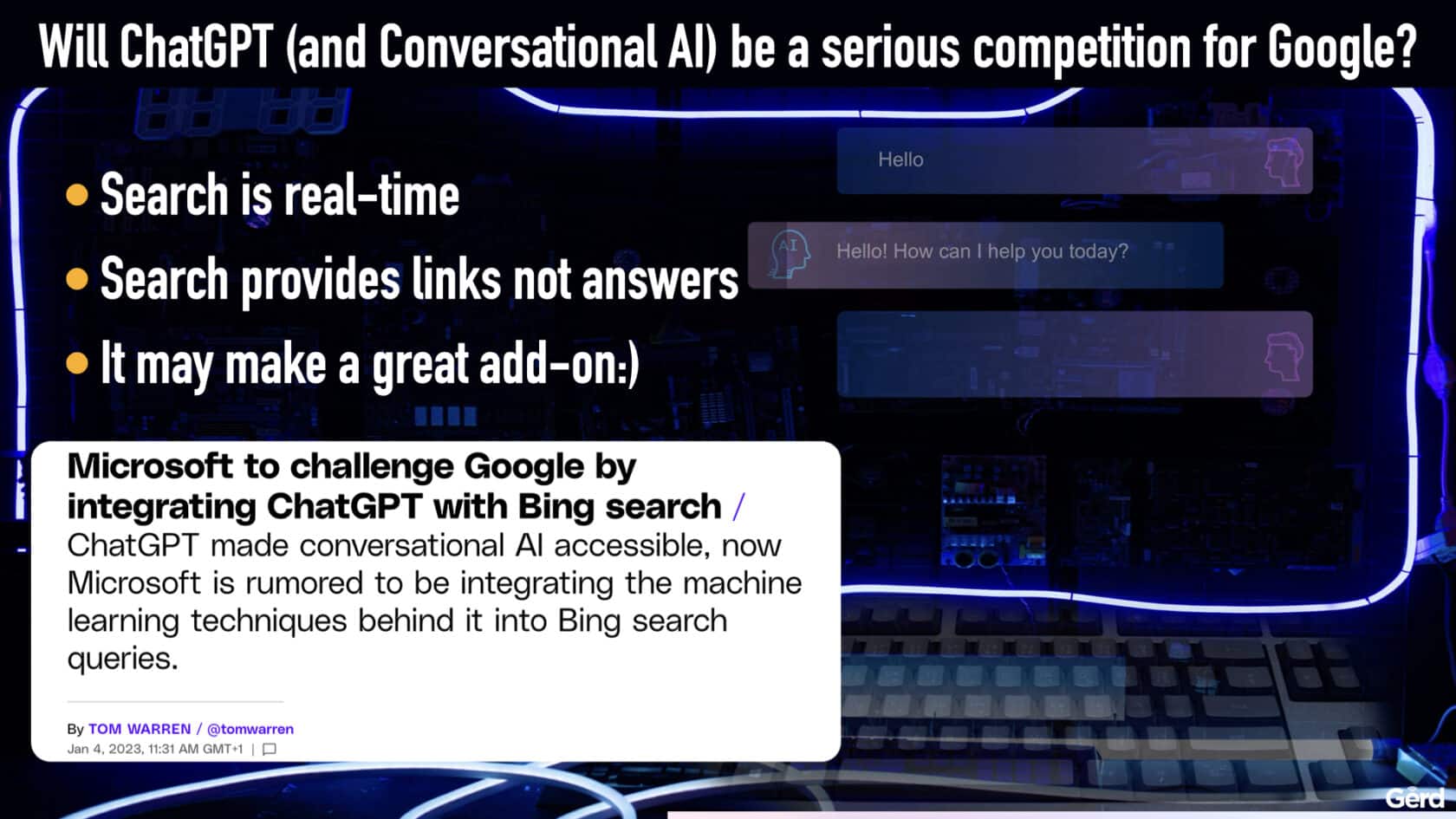

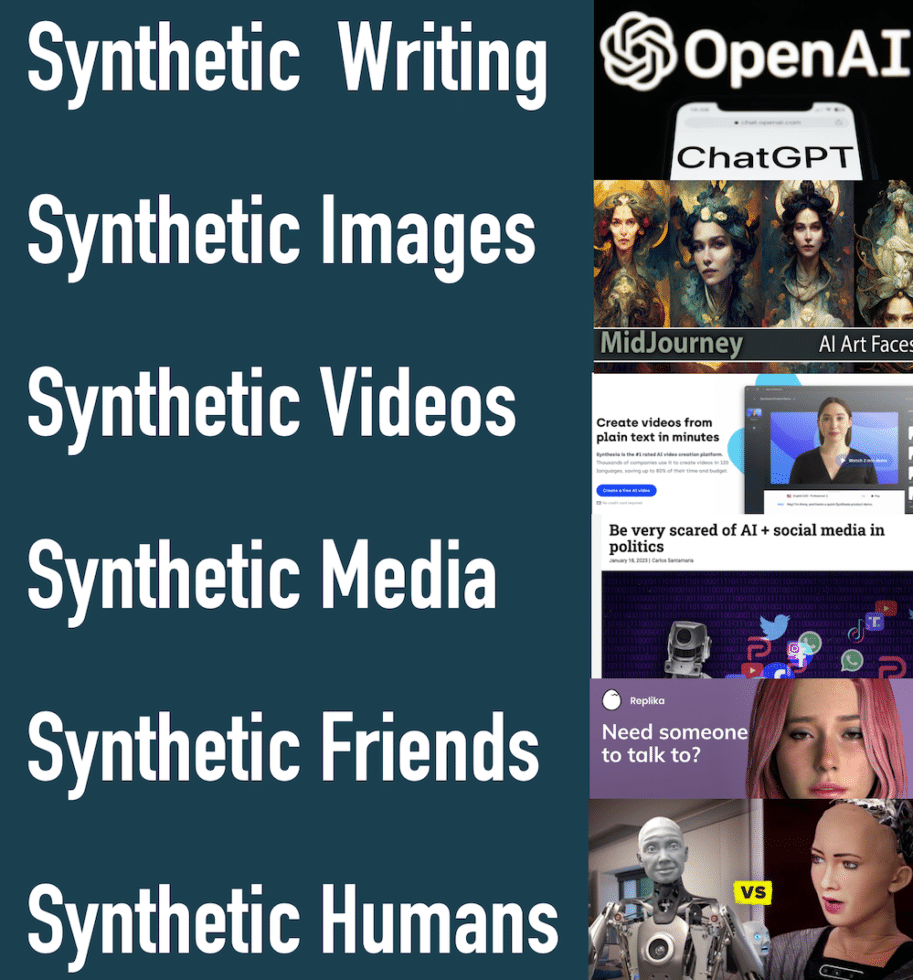

The buzz is incredible: Some pundits say that generative AI, and in particular ChatGPT, Dalle2, Midjourney and StableDiffusion, represents a revolution and will usher in the next edition of the web, similar to what happened when the mobile Internet arrived. Will ChatGPT be as big as the iPhone? Will this create the first real competitor for Google? Is that why Microsoft is investing $10 billion in OpenAI? More down to earth, does this spell the end of the college essay? Will ChatGPT boost plagiarism and general editorial laziness? Will we see a flood of (even more) meaningless ebooks generated more or less by AI? Will AI-writing apps spell the end of journalism as we know it (again)? Will we see even more synthetic ‘bullshit media’, manipulation and noise? Like social media – but 500x as bad? Will generative AI make a great companion to the so-called Metaverse, so we can all enjoy living our perfect simulations? Is this a big step towards AGI, and would that really mean ‘lights out’ for humanity? Or is it all just for fun?

First, a quick definition: “Generative artificial intelligence (AI) describes algorithms (such as ChatGPT) that can be used to create new content, including audio, code, images, text, simulations, and videos. Recent new breakthroughs in the field seem to have the potential to drastically change the way we approach content creation…”

Via Investopedia

Here are some of my comments, bottom lines, hopes, worries and concerns. Please note that for the moment I am ambivalent about the potential pros and cons – be sure to keep in touch via my newsletter.

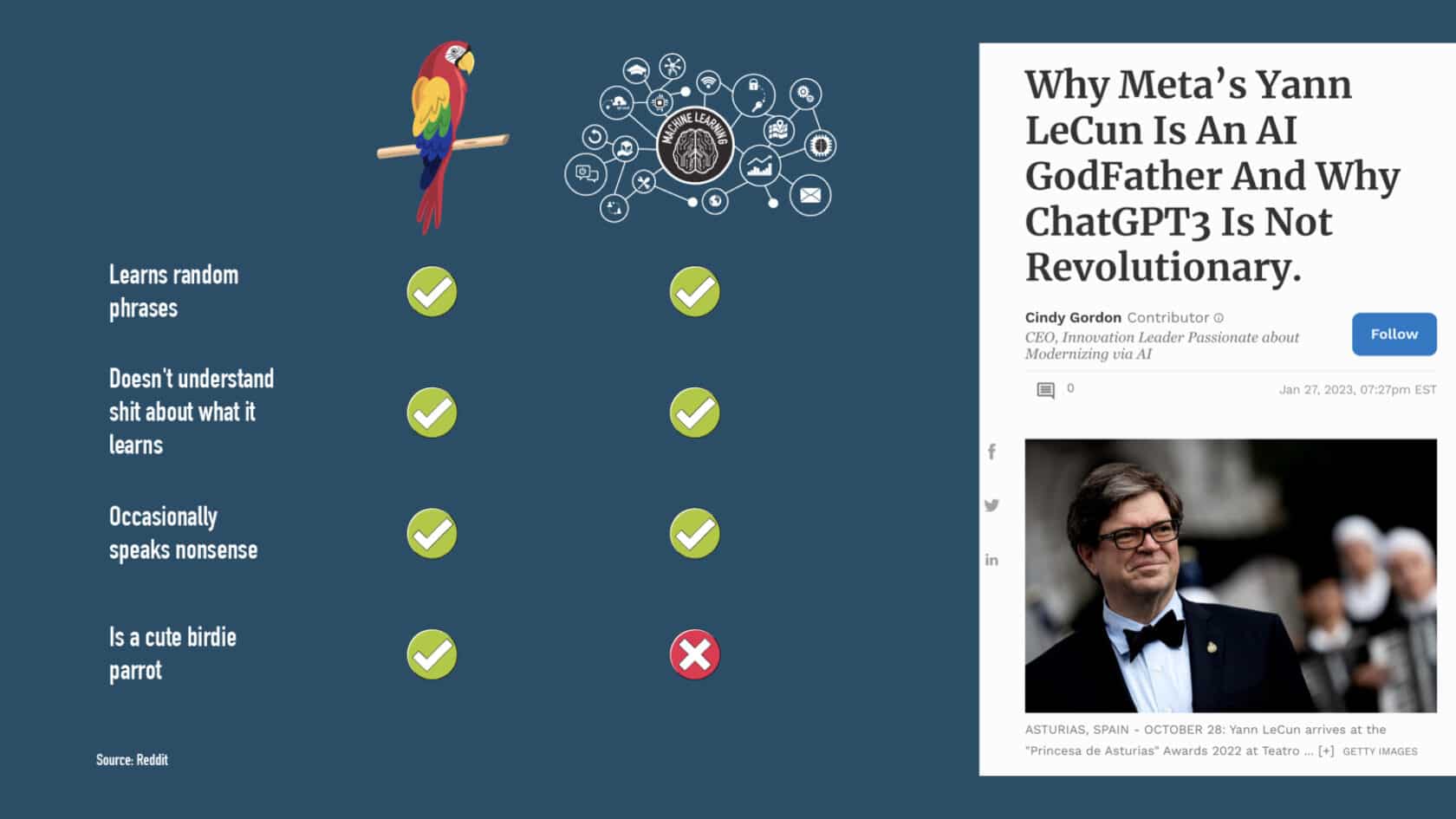

- It's not really that new: The science and methodology (in particular, deep learning) behind ChatGPT and other GenerativeAI isn't really that new (as Meta's Yann LeCun points out) – but having an open, public interface to try it out (well… if it's not down), plus APIs that tap into it, is a whole new dimension. Public responses range from grandiose excitement to harsh criticism to fear. Personally, I think it's a ballsy move by its creator, OpenAI, and it sure got the ball rolling for them :) But I'd venture to say it won't stay as openly available as it is right now, since they have clearly stirred up a hornet's nest here.

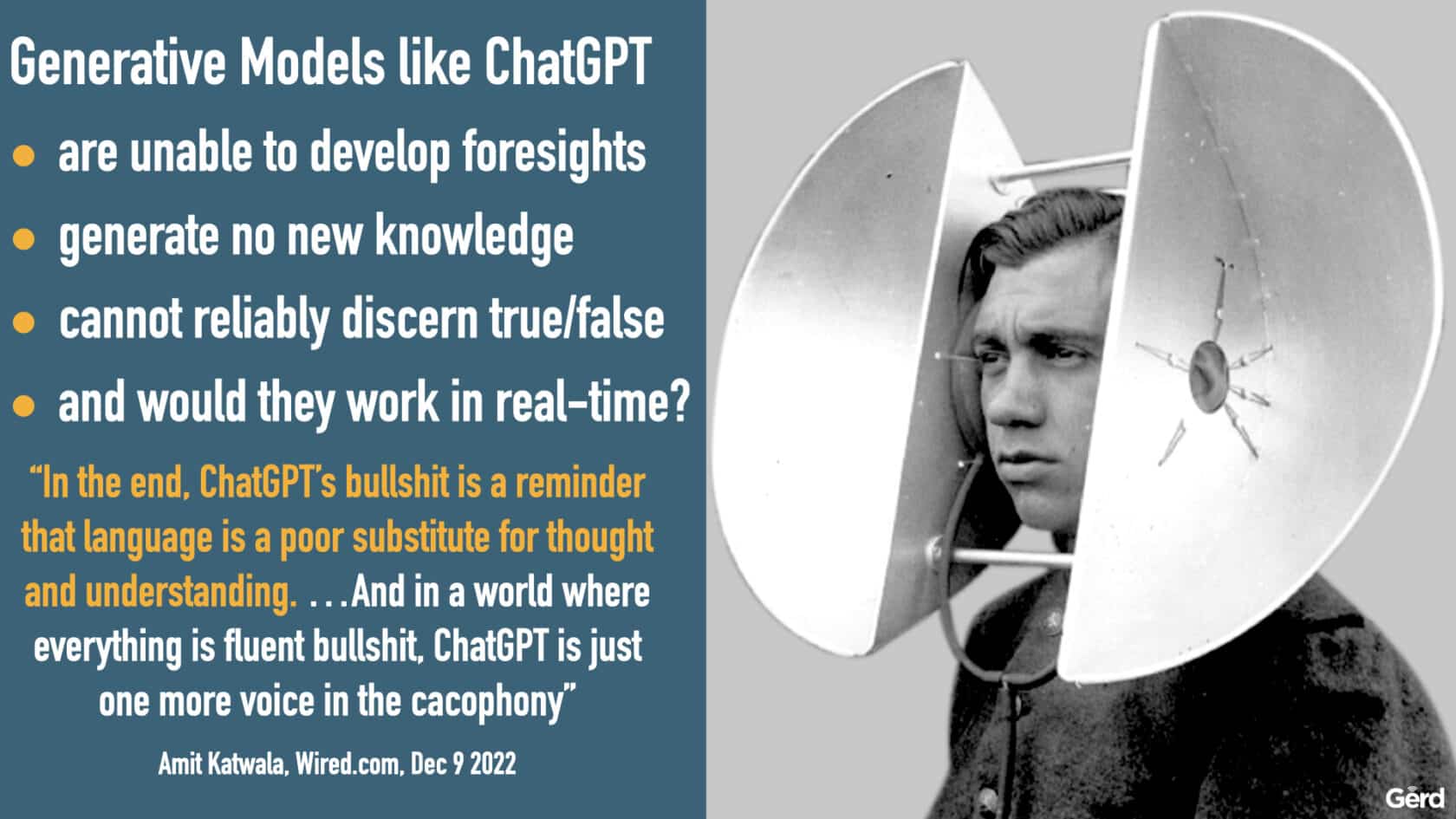

- It's impressive, but most of the text that GenerativeAI apps churn out still sounds very ‘binary’. ChatGPT in particular does a great job of generating simple texts (such as a real-estate listing), but once you dig deeper, beyond the perfectly crafted word-collages, its shortcomings surface pretty quickly. Here are some of them:

- ChatGPT is not real-time (yet): its training data cuts off in 2021. You won't get any answers that relate to current affairs, news or – OMG, that's my turf – future observations or foresights. I have no idea how OpenAI would make this work with real-time data, either; wouldn't that take 1000x the processing juice it currently needs?

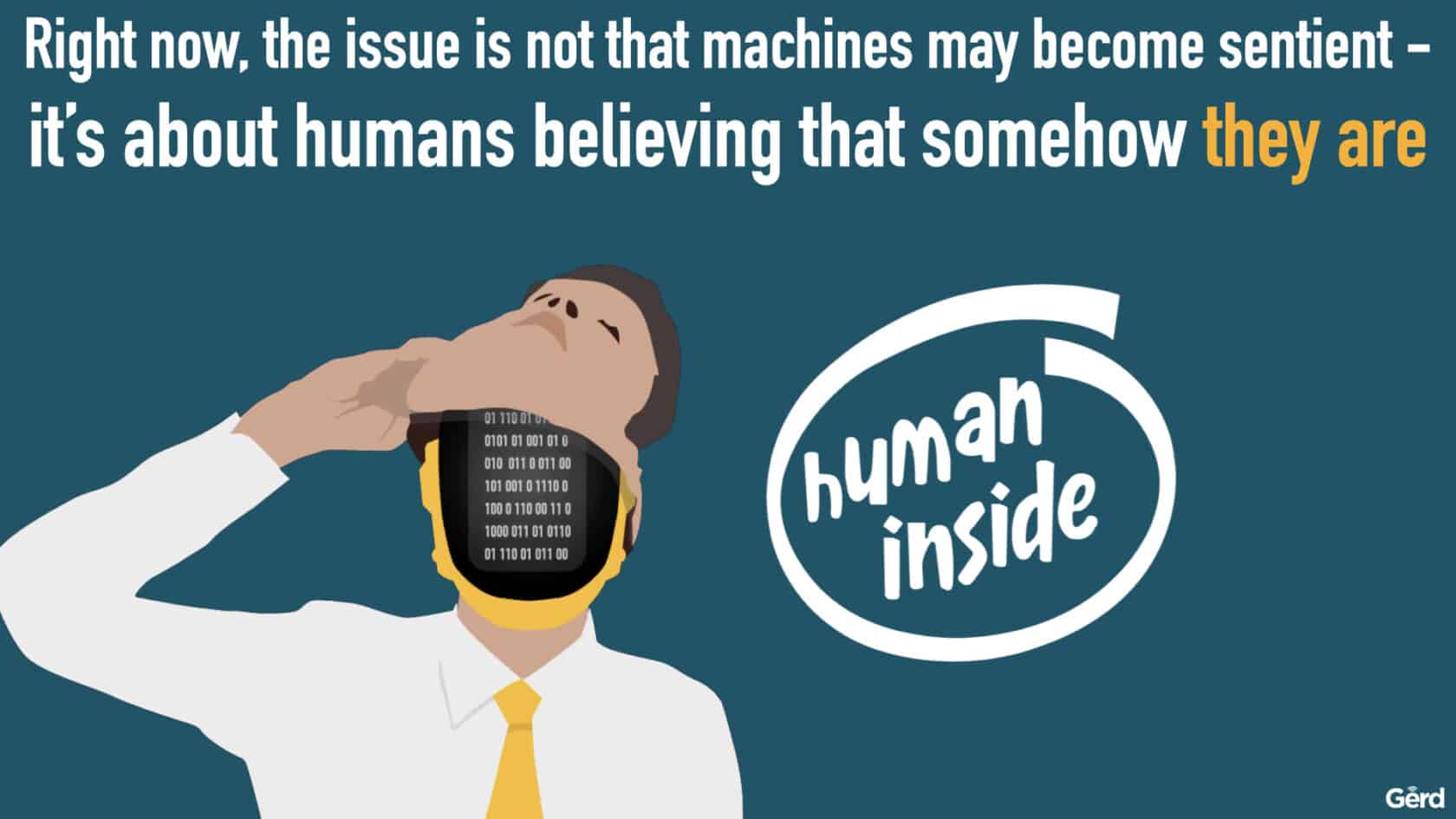

- The stochastic parrot problem: Since ChatGPT basically reassembles snippets, words and sentences from its training data as it attempts to provide answers quickly, it often confuses correct answers with suggested truths (see the examples here), which indicates that telling true from false is currently not its strength – it merely seeks to provide the best and most plausible answer as fast as possible. This has led many experts to label ChatGPT a fancy ‘stochastic parrot’. Like a parrot, it really has no idea what it is saying – it just regurgitates the snippets it ‘remembers’. In other words, it may often sound very clever and accomplished, but it's still just combining random facts, sometimes even resorting to meaningless sputterings. BUT: if ChatGPT and others like it can be used as very accomplished bullshitters, just think of the implications for social media! I shudder.

- I found the content that ChatGPT generates mostly quite generic and often a bit stiff, even though it is also quite adept at some kinds of humour and capable of drilling into details upon further probing. Impressive, indeed, but far from what I would consider the really powerful and unique writing that would be worth my time. I have, btw, dabbled with a pretty cool platform called Sudowrite, which uses OpenAI's GPT3 API (I think) and is geared towards helping writers discover new angles on what they are writing about. I found this very useful, indeed – it has helped me write better and faster, and much more fluidly overall. But again, it may also encourage my laziness…

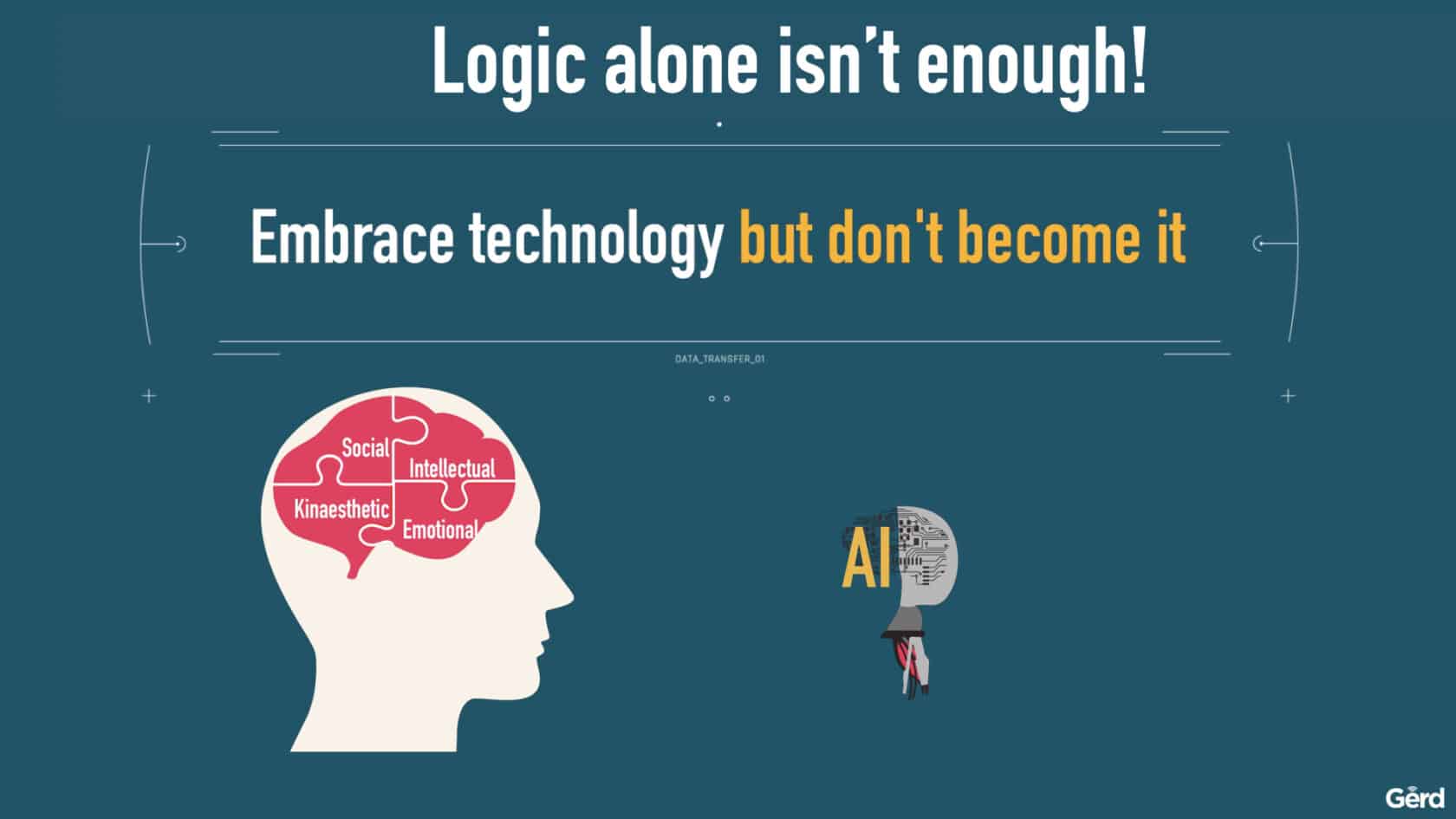

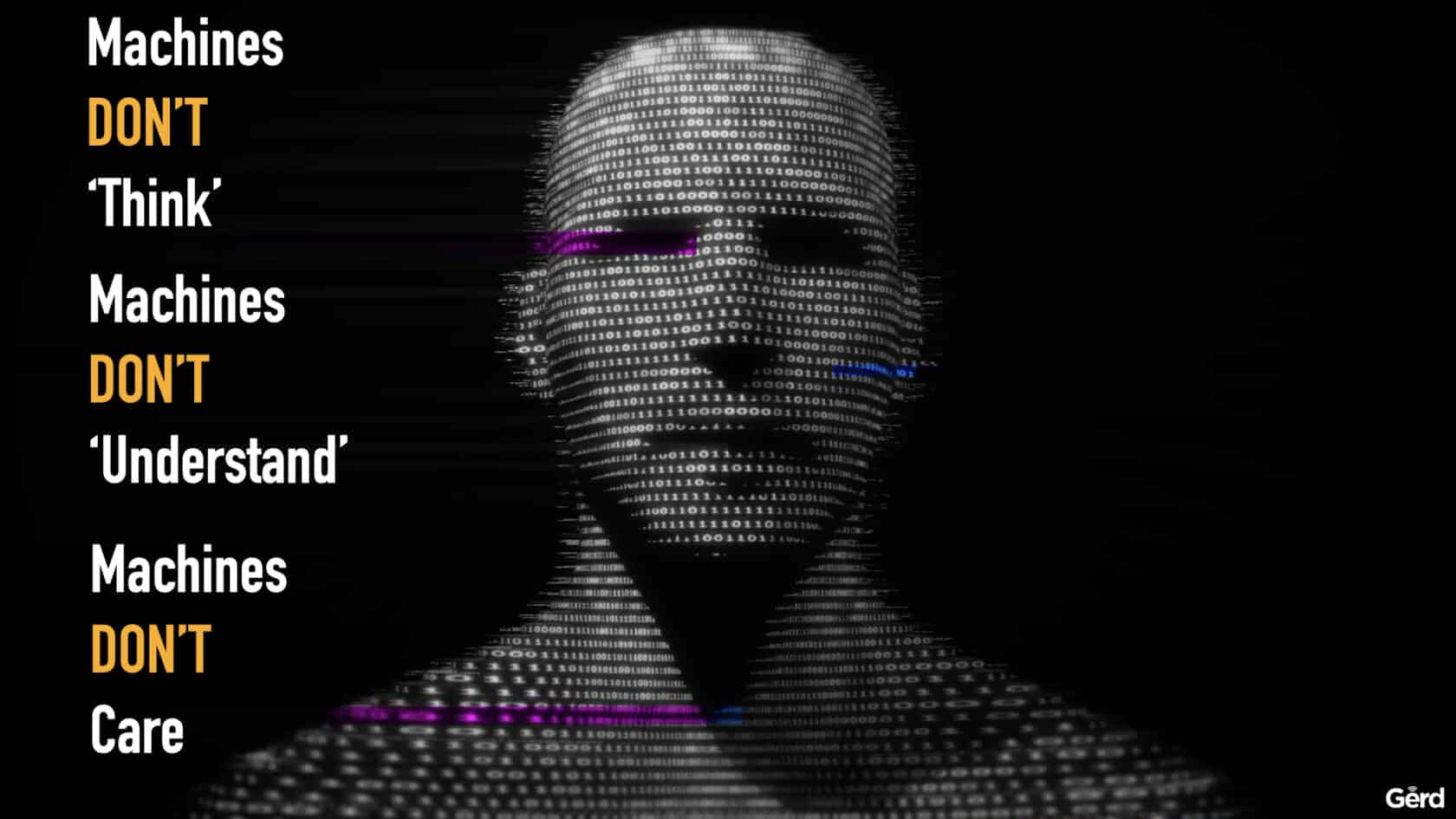

- The inevitable trust issue: Can we, will we, should we trust conversational AIs and / or applications like ChatGPT? This dilemma is similar to Google Maps: we love to use it but always wonder if it's really showing us the best way to get to our destination (never mind the many different ways Google Maps has misled us, though probably inadvertently :). The bottom line is that when we are looking for answers (not links to answers, as in search), logic alone is utterly insufficient, and deep access to data and information is not enough to generate definitive and correct answers. Simply put, the biggest issue is that AI currently lacks any of what I call ‘androrithmic comprehension’, i.e. it does not (and cannot?) know the values, concerns, feelings and ethics that underlie human decisions (and thus, human answers, stories or narratives). And let's remember: humans don't think with the brain (only) – we think with the body. A reductionist approach to real life could quickly become a serious societal issue unless we agree on some kind of global standard that discloses the use of generative AI in whatever we read or watch.

- On that note, the Guinean proverb “Knowledge without Wisdom is like water in the sand” nicely describes the issue with most of ChatGPT's output. In fact, that may be the same problem that many human writers face as well: if you have nothing to say, no original ideas, no message, no mission, no purpose… you simply end up regurgitating what's already been said elsewhere, just in slightly different ways. This may be OK for some very simple tasks, such as writing website copy for a quickie WordPress site, or for basic dime-a-dozen real estate listings. But for other uses, my experience has been that ChatGPT's texts are so generic and emotionally flat that they need a lot of human editing. Nevertheless, I anticipate that many writers will use generative AI to dig up ideas and to poke around possibilities – which seems like a good thing (for now).

- I do think it's important to understand that ChatGPT does not (and will not?) create new knowledge (never mind what I call telos, i.e. wisdom), that it is incapable of imagination and foresight (i.e. an understanding of what is not already here), and that it lacks any multinary understanding of Real Life (which is where most humans actually reside, apart from Zuckerberg). Those are pretty significant limitations – but then again, that's why we humans will not become ‘useless’🙂

- As for job losses due to even more technology-accelerated automation: We've heard the inevitable ‘machines will take our jobs’ meme before, as in “if a machine can drive a car (or truck), why would we need human drivers?” But so far, it hasn't really happened. Yes, we have some self-driving cars and trucks, in some places, under certain conditions, but at the same time humans still handle 99.9% of all driving jobs. Is this a fallacy, or are we barking up the wrong tree? Personally, I think levels 2 or 3 in autonomous driving would be a major achievement, and maybe level 4, and definitely level 5, are narratives that we should de-emphasise, for now. Maybe the meme of ‘if a machine (an AI) can write a good article, why do we need human writers’ will follow the same trajectory – meaning that sure, if you are doing a totally routine job (or task), the machine will eventually do it for you, but otherwise real life just isn't that simple. For now.

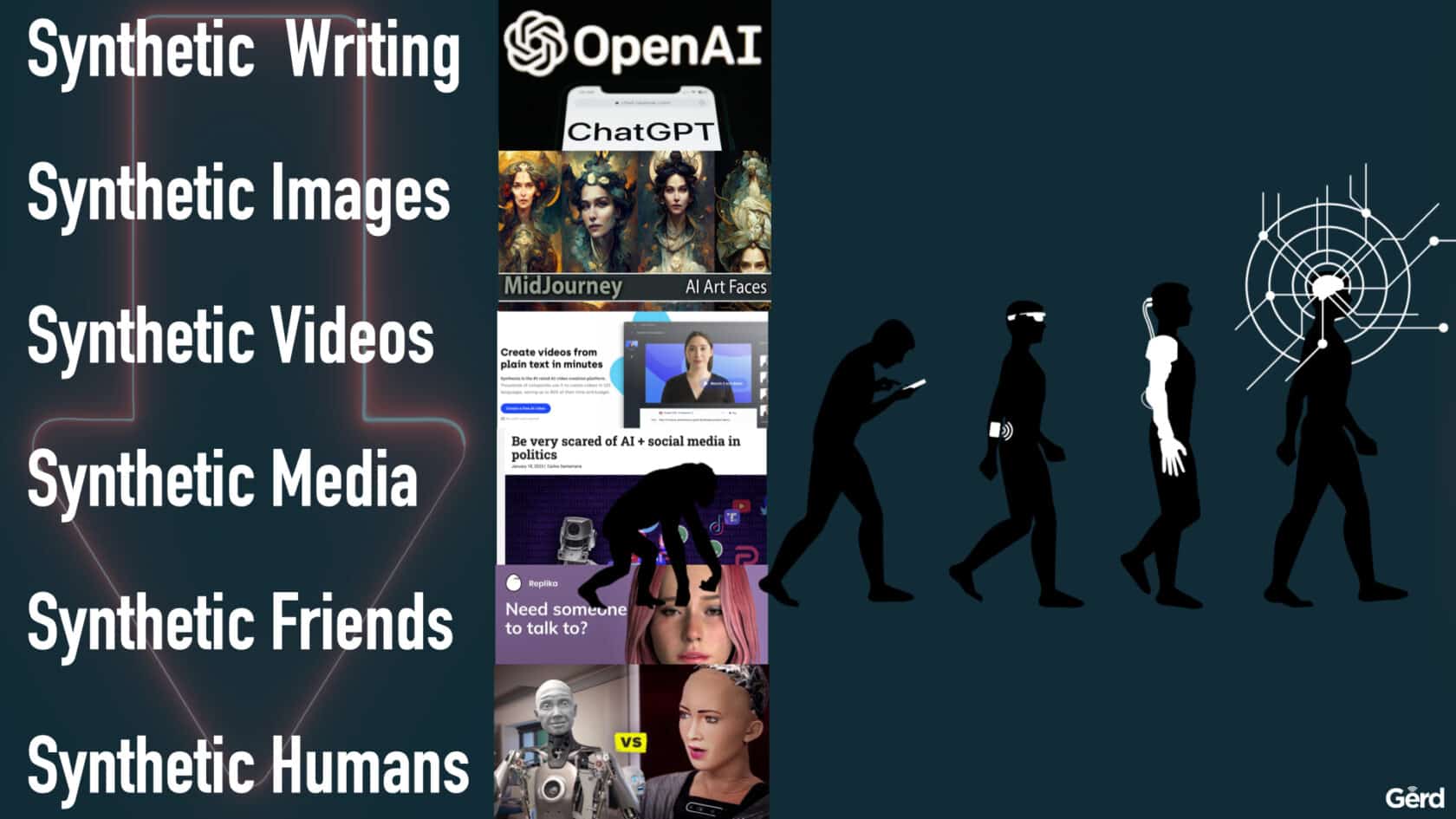

- That brings me to my biggest concern: where is this going? Are we heading into a bizarre kind of ‘Synthetic Future’? First synthetic writing, then synthetic images and videos, then synthetic media (films, podcasts and music), followed by synthetic friends (have a look at Replika), and finally synthetic humans and a … synthetic world. There is a distinct danger that our laziness will find it increasingly appealing to delegate a lot of the ‘grunt work’ (or any less exciting work, for that matter) to machines, and that we may stop making an effort ourselves. Why write your guts out for a great news piece when AI can generate it in seconds (again, apples and oranges, but still a huge issue)? Why bother making your photos look good when AI can generate any realistic scene on demand, in any location around the globe? Why bother with all the challenges of a real-life relationship with another human if we can get a WifeLike AI? Mind you, some laziness is often welcome and convenient (such as Google Maps or Uber Eats, maybe?), but taken to the extreme it would lead to increased dehumanisation of society and a general lack of meaning, imho. I shudder, again.

- A utopian world, made possible by AI? Says OpenAI's CEO, Sam Altman: “As AI produces most of the world’s basic goods and services, people will be freed up to spend more time with people they care about, care for people, appreciate art and nature, or work toward social good”. I tend to agree that technology could possibly create those protopian benefits for us, but in the end, technology will not solve social, cultural or political problems – for that, we need human collaboration and wisdom, and that is what we are missing the most!

“We will see ChatGPT and tools like it used in adversarial ways that are intended to undermine trust in information environments, pushing people away from public discourse to increasingly homogenous communities.”

Janet Haven, Executive Director, Data & Society, via Nieman Lab